[GS-03-021] Balancing Data Access. Security, and Privacy

Balancing data access, security, and privacy is a significant challenge in the digital environment. This entry examines the conflicts among these elements, which enable innovation but create vulnerabilities. Open data access fuels advancements in tools like navigation apps and AI systems but risks breaches, as seen with ransomware targeting cloud systems. Data privacy laws aim to protect autonomy but can hinder progress, while emerging cyberthreats, such as quantum computers cracking encryption, erode public trust. Technological solutions offer potential solutions: homomorphic encryption enables secure data computations, federated learning preserves privacy in AI training, and differential privacy shields individual identities in analytics. Decentralized systems empower users, and AI-driven security detects threats effectively, though scalability and ethical concerns persist. Real-world applications, from healthcare collaborations to smart city planning, demonstrate how these technologies align these elements, fostering innovation without compromising safety. Emerging trends, such as secure multi-party computation and personal data vaults, offer improved control, but ethical issues, including surveillance risks, require careful consideration. This enrty identifies strategies to balance these elements, supporting innovation while protecting trust, autonomy, and ethical responsibility.

Tags

Author & citation

Li, H. and Kwan, M.-P. (2025). Balancing Data Access, Security, and Privacy. The Geographic Information Science & Technology Body of Knowledge (Issue 2, 2025 Edition), John P. Wilson (Ed.). DOI: 10.22224/gistbok/2025.2.4.

Explanation

- Introduction

- Tensions in the Data Triad

- Technological Solutions for Balance

- Real-World Applications

- Future Horizons

1. Introduction

Consider a fitness app that offers personalized workout plans but requires access to location, photos, and contacts, raising concerns about data usage. Data drives innovations like AI assistants and smart cities, but misuse leads to breaches, surveillance, and distrust (Lehtiö et al., 2023). Balancing data access, security, and privacy is complex: open access supports innovation but increases exposure risks, strict security may reduce usability, and stringent privacy regulations can limit societal benefits. Geographic data, including coordinates and implicit references such as place names, enhances location-based services but increases re-identification risks by revealing personal movement patterns (Ye et al., 2025). These dilemmas are not just technical but also ethical, involving trade-offs between individual rights and societal gain. This entry examines the conflicts, solutions, and future implications of these elements, integrating practical examples with recent advancements. It examines how technologies address vulnerabilities, how real-world applications achieve balance, and what lies ahead as society navigates ethical challenges. This entry identifies approaches that enable data access to drive innovation, ensure security against threats, and protect privacy, fostering trust in technology.

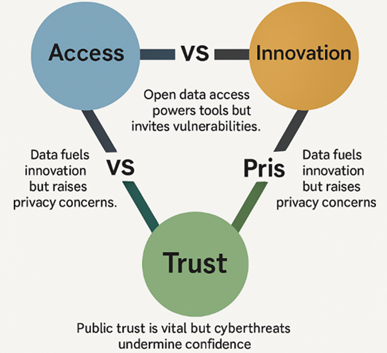

2. Tensions in the Data Triad

The interplay of data access, data security, and data privacy creates conflicts that challenge technologists and policymakers. These tensions, between enabling innovation and preventing harm, shape the digital ecosystem. This section outlines three core conflicts to understand why balance is elusive yet essential (Figure 1).

2.1 Access vs. Protection

Open data access powers tools like navigation apps and medical analytics, but it invites vulnerabilities. For example, unrestricted data systems are accessible but prone to exploitation. Ransomware exploits cloud systems, targeting accessible datasets.

2.2 Innovation vs. Privacy

Data fuels innovation, but data privacy laws like

2.3 Trust vs. Threats

Public trust erodes as cyberthreats evolve, threatening both data security and data privacy. Quantum computers could crack traditional

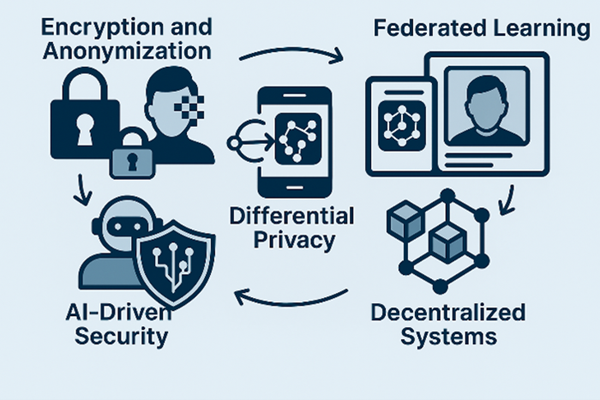

3. Technological Solutions for Balance

To resolve these tensions, technologies are emerging to align data access, data security, and data privacy. By addressing vulnerabilities while enabling innovation, they offer paths to equilibrium. This section details five key advancements reshaping the digital landscape (Figure 2).

3.1 Encryption and Anonymization

Encryption locks data behind a key, ensuring secure storage. End-to-end versions secure communications, ensuring only intended recipients access messages. Homomorphic encryption advances this by allowing computations on encrypted data—such as medical records—without unlocking it, preserving data privacy (Ci et al., 2025). Anonymization strips identifiers from datasets, but re-identification risks persist, spurring stronger methods. These tools enhance data security, preventing unauthorized access, and support data privacy by limiting exposure. Yet, they must avoid overly restricting data access, as complex encryption can slow legitimate use. These techniques protect sensitive information while enabling secure sharing, addressing the access-protection conflict.

3.2 Federated Learning

Federated learning trains AI on local devices, like phones, without centralizing raw data (Mohammadi et al., 2024). For example, it shares model updates without transferring user data. It powers apps like traffic predictors, accessing insights while keeping user details private. This preserves data privacy, supports innovation through data access, and enhances data security by minimizing data transfers. However, its high computational demands limit smaller organizations, hindering scalability. Federated learning addresses the innovation-privacy conflict by supporting AI development while protecting autonomy, though scalability requires improved infrastructure. Its development offers a model for privacy-conscious innovation that supports user trust.

3.3 Differential Privacy

3.4 Decentralized Systems

Decentralized systems, like blockchain, spread data across networks, eliminating single failure points (Chen et al., 2024). For example, data is shared across peers rather than stored centrally. Such systems secure records, such as voting data, enhancing security and privacy by empowering users. They enable authorized data access while reducing breach risks, addressing access-protection concerns. Scalability remains a challenge, as networks demand significant resources. Yet, decentralized systems counter the trust-threats tension by minimizing centralized vulnerabilities, rebuilding confidence. As infrastructure improves, they promise user-centric models that balance accessibility with autonomy, ensuring data serves innovation without compromising safety, a critical step toward trusted ecosystems.

3.5 AI-Driven Security

AI plays a critical role in anomaly detection for cyber defense, spotting anomalies like unusual data patterns that signal hacks. It strengthens data security by detecting ransomware effectively, protecting accessible systems. Yet, AI-driven attacks, like falsified signals, challenge data privacy (Hoang et al., 2024). This dual role complicates the trust-threats tension, as AI enhances and threatens security. Balancing its power requires constant innovation to stay ahead of malicious uses, ensuring data access remains safe. AI-driven defenses mitigate vulnerabilities while supporting usability, but require ongoing vigilance. Refining AI’s protective capabilities helps maintain trust, securing data while enabling access, and addressing these conflicts.

4. Real-World Applications

Practical cases show how data access, data security, and data privacy can align, addressing tensions through technology. This section explores three examples, illustrating successes and challenges in diverse settings.

4.1 Healthcare Collaboration

Healthcare depends on data for research, but breaches erode trust. A global consortium uses federated learning to analyze cancer patterns across clinics without sharing raw patient records (Pati et al., 2022). Researchers access insights, advancing treatments, while data privacy ensures anonymity. Data security measures, like encrypted transfers, prevent leaks, and data access fuels innovation. This addresses the innovation-privacy conflict by supporting progress while protecting privacy. However, maintaining robust security demands ongoing vigilance to safeguard sensitive data. This example demonstrates how technology can balance these elements, enabling medical breakthroughs while protecting patients. It underscores the need for scalable solutions to expand such models globally, ensuring trust and functionality in data-driven healthcare.

4.2 Smart City Planning

Smart cities use geographic data, like location-based sensors and GIS mapping, to optimize traffic and air quality, but tracking raises

4.3 Disaster Management with GIS

Geographic data is critical in disaster management, where real-time location data informs evacuation routes and resource allocation (Goodchild and Glennon, 2010). For example, during hurricanes, GIS platforms integrate satellite imagery and location-based data to map flood risks, but this raises geoprivacy concerns. Differential privacy protects individual coordinates while enabling spatial analysis, and homomorphic encryption secures data sharing among agencies. This balances data access for rapid response with privacy protection, addressing the innovation-privacy conflict. Scalability remains a challenge due to real-time processing demands, but such systems demonstrate how geographic data can drive life-saving innovation while safeguarding autonomy

5. Future Horizons

Secure multi-party computation enables private data analysis, boosting efficiency in logistics. By 2025, regulations, such as the EU AI Act updates, will mandate transparency, reinforcing data privacy. Personal data vaults will let users control access, shifting power dynamics and enhancing data security. Post-quantum cryptography will mature, countering quantum threats to ensure long-term protection, addressing the trust-threats tension. Meanwhile, brain-computer interfaces will raise new challenges, as neural data demands robust privacy measures. These trends favor systems that balance data access with safeguards, enabling innovation while fostering trust. As federated learning and differential privacy expand, they will enable applications in areas like smart grids and personalized medicine. The future hinges on integrating these tools to maintain usability, security, and autonomy, ensuring data empowers without vulnerability.

References

- Chen, Y., Arkin, J., Zhang, Y., Roy, N., Fan, C. (2024). Scalable Multi-Robot Collaboration with Large Language Models: Centralized or Decentralized Systems?, in: 2024 IEEE International Conference on Robotics and Automation (ICRA). Presented at the 2024 IEEE International Conference on Robotics and Automation (ICRA), pp. 4311–4317.

- Ci, S., Hu, S., Guan, D., Koç, Ç.K. (2025). Privacy-preserving word vectors learning using partially homomorphic encryption. Journal of Information Security and Applications 89, 103999.

- Goodchild, M. F., & Glennon, J. A. (2010). Crowdsourcing geographic information for disaster response: a research frontier. International Journal of Digital Earth, 3(3), 231–241.

- Hoang, V.-T., Ergu, Y.A., Nguyen, V.-L., Chang, R.-G. (2024). Security risks and countermeasures of adversarial attacks on AI-driven applications in 6G networks: A survey. Journal of Network and Computer Applications 232, 104031.

- Lee, D.-H., Sun, L., Erath, A., (2012). Study of bus service reliability in Singapore using fare card data. ETH Zurich Conference Presentation.

- Lehtiö, A., Hartikainen, M., Ala-Luopa, S., Olsson, T., Väänänen, K. (2023). Understanding citizen perceptions of AI in the smart city. AI & SOCIETY 38, 1123–1134.

- Lundberg, I., Narayanan, A., Levy, K., Salganik, M.J. (2019). Privacy, Ethics, and Data Access: A Case Study of the Fragile Families Challenge. Socius 5, 2378023118813023

- Luo, Y., Hu, T., Ouyang, X., Liu, J., Fu, Q., Qin, S., Min, Z., Lin, X. (2025). DPO-Face: Differential privacy obfuscation for facial sensitive regions. Computers & Security 154, 104434.

- Menard, P., and Bott, G.J., (2025). Artificial intelligence misuse and concern for information privacy: New construct validation and future directions. Information Systems Journal 35, 322–367.

- Mohammadi, S., Balador, A., Sinaei, S., and Flammini, F. (2024). Balancing privacy and performance in federated learning: A systematic literature review on methods and metrics. Journal of Parallel and Distributed Computing 192, 104918.

- Pati, S., Baid, U., Edwards, B., Sheller, M., et al. (2022). Federated learning enables big data for rare cancer boundary detection. Nature Communications 13, 7346.

- Yalamuri, G., Honnavalli, P., and Eswaran, S. (2022). A Review of the Present Cryptographic Arsenal to Deal with Post-Quantum Threats. Procedia Computer Science 215, 834–845.

- Ye, X., Yigitcanlar, T., Goodchild, M., Huang, X., Li, W., Shaw, S. L., … Newman, G. (2025). Artificial intelligence in urban science: why does it matter? Annals of GIS, 31(2), 181–189.

Learning outcomes

-

1922 - Analyze the key tensions between data access, security, and privacy in real-world scenarios (e.g., healthcare AI, smart cities)

Analyze the key tensions between data access, security, and privacy in real-world scenarios (e.g., healthcare AI, smart cities)

-

1923 - Evaluate emerging technologies (federated learning, differential privacy) for their ability to balance the data triad

Evaluate emerging technologies (federated learning, differential privacy) for their ability to balance the data triad

-

1924 - Propose ethical frameworks for data governance that address innovation needs while protecting individual rights

Propose ethical frameworks for data governance that address innovation needs while protecting individual rights